On November 2, 2021, Bellingham voters will have the opportunity to vote on important initiatives affecting people’s liberty and freedom. Initiative No. 2021-02 concerns the use of facial recognition technology and predictive policing technology.

Face surveillance is the most dangerous of the many new technologies available to law enforcement. If passed, this measure would:

- Prohibit the City from acquiring or using facial recognition technology.

- Prohibit the City from contracting with a third party to use facial recognition technology on its behalf.

- Prohibit the use of predictive policing technology.

- Prohibit the retention of unlawfully acquired data.

- Prohibit the use of data, information, or evidence derived from the use of facial recognition technology or predictive policing technology in any legal proceeding.

- Authorize private civil enforcement actions.

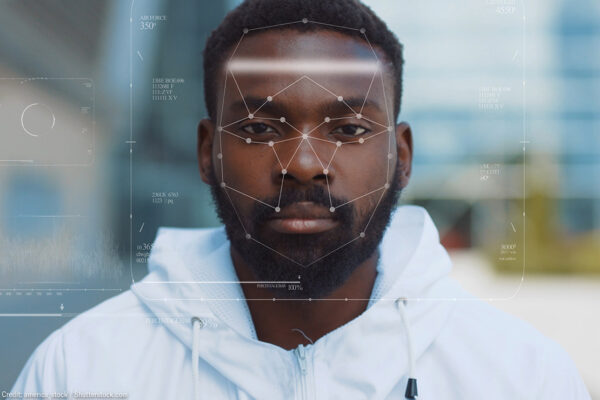

A facial recognition system is a technology capable of matching a human face from a digital image or video frame against a database of faces. Typically employed to authenticate users through ID verification services, it works by pinpointing and measuring facial features from a given image.

Facial recognition systems are employed throughout the world today by governments and private companies. Their effectiveness varies, and some systems have been scrapped because they did not work. The use of facial recognition systems has also raised controversy, with claims that the systems violate citizens’ privacy, commonly make incorrect identifications, reinforce gender norms and racial profiling, and fail to protect sensitive biometric data. These concerns have led several cities in the United States to ban facial recognition systems.

According to the ACLU, facial recognition systems are built on computer programs that analyze images of human faces for the purpose of identifying them. Unlike many other biometric systems, facial recognition can be used for general surveillance in combination with public video cameras, and it can be used passively, without the knowledge, consent, or participation of the subject.

The biggest danger is that this technology will be used for general, suspicionless surveillance. State motor vehicle agencies possess high-quality photographs of most citizens that are a natural source for face recognition programs and could easily be combined with public surveillance or other cameras to build a comprehensive system of identification and tracking.

My opinion? Vote YES on Initiative 2021-02.

The technology itself can be racially biased. Groundbreaking research by scholars Joy Buolamwini, Deb Raji, and Timnit Gebru drew our collective attention to the fact that, yes, algorithms can be racist. Buolamwini and Gebru’s 2018 research concluded that some facial analysis algorithms misclassified Black women nearly 35 percent of the time, while nearly always getting it right for white men. A subsequent study by Buolamwini and Raji at the Massachusetts Institute of Technology confirmed that these problems persisted in Amazon’s software.

Please contact my office if you or a friend or family member is charged with a crime. Hiring an effective and experienced criminal defense attorney is the first and best step toward justice.